May 4, 2015 20 min read

Testing Employees After Training: Best Practices for Workforce Training Assessment

Companies put a LOT of money, time, and hard work into training their employees. New employee onboarding, job role training, succession training, compliance training–you get the picture.

In many or most cases, that training includes some element of testing. People in learning and development often refer to this testing as “assessment” or “level 2 evaluation” (to learn why, read our article on the Kirkpatrick 4-level Evaluation Model). We’re going to try to use the word “test” as much as possible in this article, though we’ll fall back on assessment from time to time.

The problem many trainers face is that after putting so much work into planning and creating the training materials, it can be easy to give short shrift to the test part. And that can be a BIG problem, because you don’t really know if your employees are learning what they have to learn if you’re not testing in one way or another. You may be providing training that’s not effective for some, many, or all of your employees and never know it. That’s why we’re going to focus on tests in this post: testing employees after training. Hope you find some stuff interesting and helpful.

You’ll find that this post covers testing at a high, fairly general level. If you want more detail, see the more specific articles listed at the end of this post.

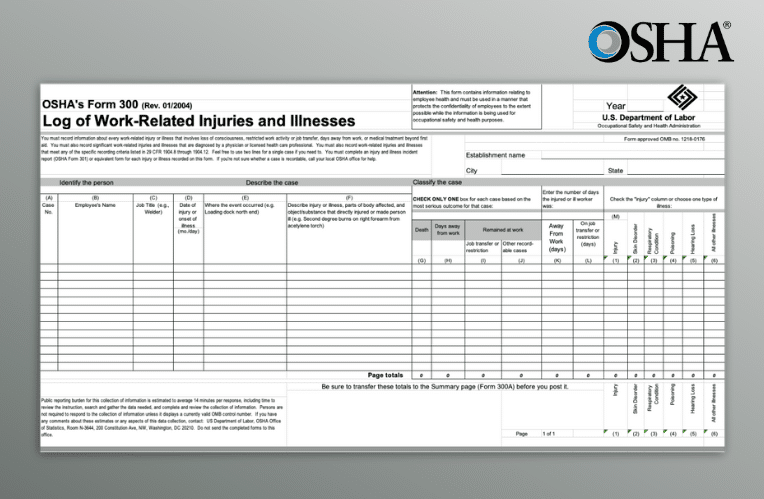

Aligning Job Performance & Behaviors, Learning Objectives, and Tests

Let’s begin by talking about some things that are closely related: the desired on-the-job performance of your workers, your learning objectives, and your tests.

Job Performance & Behaviors: What You Want Employees To Do On the Job

This should go without saying, but it’s a fundamental concept and so it’s worth starting here. You provide training because you want people to know things they need to know for the job, or because you want them to be able to do things they need to do on the job. In other words, you want to prepare them to use their knowledge and skills to perform job tasks.

So that’s the first part of the puzzle. Figure out what it is that you want employees to know or be able to do on the job.

Learning Objectives

After you’ve come up with a list of the things you want your employees to know or be able to do on the job, it’s time to create learning objectives for your training materials.

We could write a much longer blog post about learning objectives. In fact, we have–check here and here. And there’s more to say than that, too, so you might want to download our free guide to learning objectives.

Although there’s a lot to say about creating learning objectives, we can boil it down to a few key points, and we have below.

Tips for Creating Learning Objectives

The basic idea of a learning objective is that it’s a simple, clear statement of what the employee should be able to do when the training is over. Here are some characteristics of a well-written learning objective:

- It’s something the worker has to do or know on the job

- It’s written as a performance–either something the employee does to demonstrate knowledge (don’t create learning objectives that include words like “know” or “understand” because that’s not something the employee can “do”), or it’s written as something they do to demonstrate a skill

- It’s written in a clear, non-ambiguous manner so that it’s easy for anyone to tell when the employee has satisfied the learning objective

- It may include not only a performance but also conditions–for example, not just “tighten a screw” but “tighten a screw using a wrench”

- It may include not only a performance but also a standard–for example, not just “detect a defective roll” but “detect defective rolls 100% of the time during an hour of production time”

Hopefully that short list of bullet points helped. If you want to know more about learning objectives, check out those links above. Otherwise, we’ll move on to show how your learning objectives and your assessments should be related.

Matching Your Learning Objectives and Your Tests

This next point may be from the School of Obvious, but once you’ve determined what your employees need to know or do on the job, and then you’ve created learning objectives to match, you must create tests that match your learning objectives as well. In other words, create tests that allow you to effectively determine if your employees have met those learning objectives.

You’ll do this by creating one or more test items for each learning objective. By test item, we mean one part of your test. For now, think of this as a question within the test, or perhaps a skill demonstration.

Be careful at this phase, because it can sometimes be easy to create a test question that doesn’t really assess the learning objective. For example, the learning objective might be “Perform procedure X” and the question would be “List the steps of procedure X in order from first to last.” But listing the steps of a procedure is different than performing the procedure. This issue is addressed in more detail in our separate article about workplace tests and fidelity.

Creating Tests that Mimic Realistic Actions & Decisions Workers Will Perform on the Job

One of the most important things to keep in mind is that the questions you ask in your test (or the behaviors you ask employees to demonstrate, etc–the “test items”) should as much as possible directly match the behaviors you want employees to perform on the job.

So, if you want to teach employees to operate a machine, an ideal test or assessment would be to go into the field and ask them to demonstrate that they can operate the machine. If you can’t do that, perhaps you could set up a simulator that allows them to “mimic” operating the machine, or a more traditional elearning course that lets them practice operating a simulated version of the machine.

Or, if you have to create a test question in a format like multiple choice, you can avoid writing questions that are based on recall of knowledge and information (example question: “what is this called?”) and instead write a question that presents a scenario or decision point and ask the worker to select the best possible decision or action for that scenario. Read more on scenario-based learning here.

Just remember–try to make the test item as similar to the real-world, on-the-job skill, behavior, or performance as possible.

Testing Employees After Training: Knowledge Tests and Task- or Skill-Based Tests

You can test workers in many different ways, but generally they break down into two categories:

- Knowledge tests

- Task-based tests (also known as skill-based tests or performance assessments)

Let’s take a look at each. (See Note 3.)

Knowledge Tests

Knowledge tests are generally used to determine if your employee knows something or can apply that knowledge as opposed to whether or not they can perform a task. Knowledge tests include:

- True/false questions

- Multiple-choice questions

- Multiple-response questions

- Matching questions

- Drag and drop questions (some)

- Fill-in-the-blank questions

- Short answer questions

- Essay questions

In short, it’s kind of a combination of the stuff you might have done in school and some stuff you might do in basic eLearning courses.

Your knowledge tests should include one or more test items for each learning objective. Again, in common terms, this means one or more questions per learning objective.

You may find these articles helpful:

- Writing multiple-choice questions

- Writing true/false, matching, dragging, and other types of questions

A quick tip about knowledge-based tests before you move on. There are legitimate reasons why you might want to teach employees knowledge and then test them on their understanding of that knowledge. For example, this can be important in compliance training. However, in most cases at work, the goal of training is to help employees apply knowledge successfully on the job to perform job tasks (not simply prove they know something).

As a result, when you’re sitting down to create a knowledge-based assessment, always ask yourself if that’s really what you want to do, or if you’re just being a bit lazy and creating a test about knowledge when you really should be creating a test about the application of that knowledge, one that mimics or duplicates a real-life experience employees would face at work. If it’s the latter, there are many ways to recast your question so it’s closer to the decision and performance that workers will have to complete on the job.

Task- or Skill-Based Tests (Performance Assessments)

A task-based test is a test of your worker’s ability to actually perform a real job task in a real work environment (or a realistic simulation of the work environment). The idea is that your workers will actually perform the task or skill, not simply say, recall, select, or list as they would in the knowledge tests listed above.

In some cases, your task-based test will ask your employees to perform the skill in real life. In other cases, your task-based test will ask your employees to perform the skill in some form of simulated environment. For example, airplane pilots are tested in sophisticated flight simulators, and I’ve seen similar simulators for crane operators. In other cases, the simulation may be something a little more simple, like an e-learning course that presents a work-like scenario and then asks the worker a question like “what would you do in this situation?”

Your task-based tests will require your workers to perform one or more test items for each learning objective. Or, in more common terms, to complete one or more performances/behaviors for each learning objective.

You’ll typically have a supervisor evaluate the performance of the employees performing the task-based test. In those cases, you’d create a checklist or some form of rating scale that they can use to record their evaluations. Remember that all employees being evaluated on their performance of a task should be evaluated in a fair, uniform, objective manner, so it’s important that all evaluators know the criteria for a successful performance of the task and apply those criteria.

Of course, in the simulation and scenario-based e-learning examples discussed earlier, a computer will record the results for you. Click to read more about using scenario-based learning and assessments.

When To Use Knowledge Tests and When to Use Task-Based Tests

So now that you know you’ve got two big forms or types of assessments, the obvious question is which should you use when?

As you might have guessed, you answer this by going back to the learning objective that you’re making the test for. When we discussed learning objectives above, we said they should always be written in the form of a performance or a behavior. For example, they should include verbs like state, list, match, select, operate, construct, etc.

But even though the learning objective asks for a behavior in the form of a verb, if you look closely, you can see some of the verbs are asking the employees to perform a behavior that demonstrates knowledge (these are the words like state, list, select, and match) and others are asking the employees to perform a behavior that demonstrates a skill or the ability to perform a task (operate, construct, build, etc.).

So, in general, you can use a knowledge test or test item (such as a true/false or multiple-choice question) to test workers on their ability to satisfy a learning objective that asks for a performance that demonstrates knowledge (the objectives that ask for things like state, recall, list, match, select). And you can use a task-based test or test item (such as “turn the machine on” or “thread the materials through the machine”) to test workers on their ability to satisfy a learning objective that asks for a performance that demonstrates that they have a given skill or can perform a given task. But remember the caution provided earlier about using knowledge-based assessments when a task- or skill-based assessment would be more appropriate.

Here’s a table that may help:

| What You Want Employees to Do | Testing Method | Example |
|---|---|---|
| Repeat facts | Knowledge | State, recall, list, etc. |
| Explain concepts | Knowledge | Discuss, explain, etc. |
| Apply understanding of a process to perform a job task | Task-based | Do, build, etc.–something performance-based |
| Perform a procedure | Task-based | Do, perform, etc.–something performance-based |
| Find and/or analyze information | Task-based | Find, analyze, apply, etc.–something performance-based |
| Determine proper course of action given specific circumstances | Task-based | Do the next thing/step given these circumstances (or you might do this as a test assessment–explain the next thing/step) |
| Perform a job task (create, build, construct, assemble) | Task-based | Build, construct, assemble, etc.–something performance-based |
| Apply a principle | Task-based | Apply principles from training to react appropriately in given scenarios, etc.–something performance-based. Note: an example of this is a salesperson making a sales demo/pitch to a variety of “mock” customers during a training exercise |
| Troubleshoot | Task-based | Diagnose and fix a problem |
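
If you keep your learning objectives in a spreadsheet or script, the verb-to-test-type idea above could be sketched roughly like this. It’s only an illustration: the function name `suggest_test_type` and the two verb sets are our own assumptions, built from the example verbs in this article, and a real objective still deserves a human look.

```python
# Verb lists are assumptions drawn from the examples in this article;
# extend or change them to fit your own learning objectives.
KNOWLEDGE_VERBS = {"state", "recall", "list", "match", "select", "discuss", "explain"}
TASK_VERBS = {
    "operate", "construct", "build", "assemble", "perform",
    "troubleshoot", "diagnose", "apply", "find", "analyze",
}


def suggest_test_type(objective: str) -> str:
    """Suggest a test type from the first word (the verb) of a learning objective."""
    words = objective.strip().lower().split()
    verb = words[0] if words else ""
    if verb in KNOWLEDGE_VERBS:
        return "knowledge"
    if verb in TASK_VERBS:
        return "task-based"
    # Unknown verbs (or vague ones like "understand") need a person to decide.
    return "review manually"


if __name__ == "__main__":
    for objective in [
        "List the steps of procedure X",
        "Operate the machine",
        "Understand the policy",
    ]:
        print(f"{objective!r}: {suggest_test_type(objective)}")
```

Notice that an objective starting with “understand” falls through to manual review, which fits the earlier advice to avoid verbs that describe something the employee can’t “do.”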

If your trainer’s “spidey sense” is tingling because this all seems a bit familiar, that’s because it’s similar to the hierarchy of learning objectives that Benjamin Bloom is famous for.

Creating Your Tests

Now let’s begin looking at the actual process of creating your tests and the test items within them.

When to Create Your Tests

MANY experts in instructional design and/or learning and development will tell you the best time to create your tests is right after you’ve created your learning objectives. That’s right–after you’ve created your learning objectives but BEFORE you’ve created your training content/activities.

This may seem weird, funny, strange, or counter-intuitive to you. It did to me the first time I heard it. But it’s worth giving it a shot. Here are a few reasons why this makes sense:

- You just created the learning objectives, so they’re fresh in your mind. Now’s the time to create those tests, while the iron is hot. Remember your goal in creating tests is to make sure your employees can satisfy the learning objectives, so this linkage makes sense.

- If you create your training materials before you create your tests, you run the risk of letting something in the training materials pull your test off-target a bit.

So, even if this sounds strange, why not give it a try and see how it works for you?

Before You Create Your Tests

Before you begin creating your tests, it’s worth your time to create a plan. While planning, consider the following issues:

- For each learning objective, what kind of test items do you need to create–a knowledge test or a task-based test?

- How many test items should you create in total? To determine this, know that you’ll need at least one test item for every learning objective. Then, you may decide to create more than one test item for some or all of the objectives. For example, some objectives may be more important than others–if so it’s OK to create more test items so you’re sure the workers can perform them. Or, your worker may have to perform a skill in different situations on the job–if so, it’s OK to create different test items for the performance of the skill in each of the different situations.

- What would be a passing score? To come up with this, you can use what seems to you the lowest level of performance acceptable on the job. Or, you can get advice from subject matter experts (SME) who may have an opinion. One thing to keep in mind is that you may have different passing scores for the different learning objectives within your training–maybe some objectives are absolutely critical and require 100% passing, whereas others are less critical and 80% is OK.
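
To make the “different passing scores for different objectives” idea concrete, here’s a minimal sketch. The objective names and thresholds are made up for illustration, and the rule that a worker must meet every objective’s passing score to pass the whole test is our assumption, not a requirement.

```python
def objective_results(scores, passing_scores):
    """Compare each objective's score (0-100) to that objective's own passing score.

    Returns a dict of {objective: True/False}.
    """
    return {obj: scores[obj] >= passing_scores[obj] for obj in passing_scores}


def passed_test(scores, passing_scores):
    """Assumption: a worker passes only if every objective meets its passing score."""
    return all(objective_results(scores, passing_scores).values())


# Hypothetical objectives and thresholds, for illustration only.
passing_scores = {"lockout/tagout": 100, "machine startup": 80}
worker_scores = {"lockout/tagout": 100, "machine startup": 85}

if __name__ == "__main__":
    print(objective_results(worker_scores, passing_scores))
    print("Passed:", passed_test(worker_scores, passing_scores))
```

Scoring by objective, instead of with one overall percentage, keeps a strong result on a less critical objective from hiding a miss on a critical one.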

The Importance of Feedback and Consequences

There are a number of things that are proven with data to improve the effectiveness of training. Providing feedback to the learners is one of those.

You can and should provide feedback to employees during training, but don’t neglect to do that during assessment as well. Remember that testing is another fantastic learning opportunity.

Be sure to build the opportunity for feedback into your tests. If a worker is right, make sure you explain, demonstrate, or discuss why the worker is right. If the worker is wrong, make sure you explain, demonstrate, or discuss why the worker is wrong and help the worker move toward the correct option.

The same is true when a worker is being assessed on the performance of a specific job skill or task. Always set up the assessment so that the worker can see the consequences of his or her choices, behaviors, decisions, and performances. And always be prepared to discuss those consequences further.

In short, remember that all forms of feedback are exceptionally valuable learning opportunities.

Evaluating Your Tests

You’re not done just because you’ve created your tests.

You should evaluate them to make sure they’re what we might call “good,” although you’ll soon see that there are different words and measurements for how “good” a test or test item is.

Good Tests Are Both Valid and Reliable

A good test is both reliable and valid. Reliable and valid are specialized terms used to describe tests/test questions. In short, this is what they mean:

- Valid: A valid test is accurate. If an employee passes a valid test, that employee really can satisfy the learning objective. Likewise, if an employee doesn’t pass a valid test, that employee really can’t satisfy the learning objective.

- Reliable: A reliable test gives you consistent results. If an employee can’t satisfy the learning objective, a perfectly reliable test will tell you that every time. Likewise, if an employee can satisfy a learning objective, a perfectly reliable test will tell you that every time.

For a much more detailed explanation, check our separate article on valid and reliable tests.

Tests and Consequences

One thing to think about is: “What are the consequences for my workers if they pass or fail this test?”

In some cases, maybe passing means they can go on to the next activity or module. And failing just means they have to take the test again, or they get some feedback from a manager intended to help them pass. In other cases, passing may mean they can be allowed to move on and do their job, and failing may mean they can’t.

There are many factors to keep in mind when determining the consequences of a test, but the fundamental one is probably this: “How important is it that the employees satisfy the learning objectives?” If it’s a nice-to-have but not critical, the consequences should be low. If it’s critical–like there’s a life at stake, for example–the consequences should be very high. So, match the importance of the knowledge/skill with the consequences of the test.

Be sure to communicate the consequences of the tests to your workers. Let them know what your reasons are for providing each test.

Practical Tests

Sometimes a test isn’t going to be perfect. Maybe it won’t be perfectly valid–only “pretty much valid,” if you will. Or maybe it will test most of the learning objectives well but not all of them. Again, this is a case where you’ve got to look back to the importance of the knowledge and skills in the learning objectives, and then ask yourself if it’s OK to have a less-than-perfect test.

In some cases, designing a perfect one isn’t really practical when you look at the time and expense. In other cases, it’s absolutely essential. (See Note 2.)

Beta Testing Your Test Before You Use it For Real

Once you’ve created your test, it can be tempting to rush it into action by giving it to real employees in a real assessment situation. But you shouldn’t. Before you use it for real, give it a:

- Final review

- Beta test with a small number of workers

The Final Assessment Review

Perform a final review of your test before you try it on any workers for real to try to catch any errors you made during the creation process. You might do this on your own or with the help of a subject matter expert (SME). Here are some things to look for:

- The learning objective matches the assessment (you’re using a “knowledge” or “task-based” test correctly, you’re testing the right knowledge or performance, etc.)

- You’ve got the number of test items correct (at least one for each learning objective, more for the most important objectives, multiple test items if a performance has to be performed in different circumstances)

- Test items are a true match for the desired workplace performance: same performance, same difficulty, same circumstances, etc.
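
The “number of test items” check in that list is easy to automate if your test items are tagged with the objective they assess. Here’s a rough sketch; the `coverage_gaps` function and the (item, objective) pair format are assumptions made for this example.

```python
from collections import Counter


def coverage_gaps(objectives, test_items, minimum=1):
    """Return the objectives that have fewer than `minimum` test items.

    test_items is a list of (item_text, objective) pairs.
    """
    counts = Counter(objective for _, objective in test_items)
    return [obj for obj in objectives if counts[obj] < minimum]


if __name__ == "__main__":
    # Hypothetical objectives and items, for illustration only.
    objectives = ["Perform procedure X", "Detect defective rolls", "Tighten a screw using a wrench"]
    test_items = [
        ("Demonstrate procedure X on line 2", "Perform procedure X"),
        ("Demonstrate procedure X during a changeover", "Perform procedure X"),
        ("Pick the defective roll from these samples", "Detect defective rolls"),
    ]
    print("No items yet:", coverage_gaps(objectives, test_items))
    print("Fewer than two items:", coverage_gaps(objectives, test_items, minimum=2))
```

You could call it with a higher `minimum` for just your most important objectives, since those are the ones that may deserve more than one test item.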

Run a Limited Test With a Small Number of Employees

After you’ve reviewed your test, give it a quick beta test with a small number of employees within the target training audience. Consider the following:

- Perform the beta test in the exact same circumstances that the real test will be held

- Make sure you’re delivering to a representative selection of the type of employees who will be taking the test

- Look for confusion or problems from the beta test audience; check their answers for things that everyone gets right or everyone gets wrong, as these outliers may signify a problem

- If you’re using a checklist or rating scale, look for problems with that, including problems reported by the evaluators (maybe they don’t understand what it takes to earn a check or what the different ratings signify)
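
If your beta test results end up in a file, spotting the items that everyone got right or everyone got wrong can be sketched like this. The `flag_items` function and the data layout (item ID mapped to a list of True/False results, one per beta tester) are assumptions for the example.

```python
def flag_items(responses):
    """Flag test items that every beta tester got right or every beta tester got wrong.

    responses maps an item ID to a list of booleans (True = correct),
    one entry per beta tester. Items with no responses are skipped.
    """
    flags = {}
    for item, results in responses.items():
        if not results:
            continue
        if all(results):
            flags[item] = "all correct"
        elif not any(results):
            flags[item] = "all incorrect"
    return flags


if __name__ == "__main__":
    # Hypothetical beta test results, for illustration only.
    beta_results = {
        "Q1": [True, True, True, True],
        "Q2": [True, False, True, False],
        "Q3": [False, False, False, False],
    }
    print(flag_items(beta_results))
```

A flag isn’t proof of a bad item. With a small beta group, an item everyone gets right may simply be something the group learned well, so treat flags as a prompt to go look.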

When to Perform Tests

Now that you’ve got a test, you’ve got at least four options for when to deliver that test to your workers. They are:

Before Training

There are at least two reasons to give a test before your training. The first is if you need to make sure your employees can perform any necessary prerequisites that are required for your training. The second is to create a pre-test that might allow workers to “test out” of all or part of your training.

In addition, you can compare the results of the test before training with results of the test after training to measure how much you’ve closed your gap.
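
As a simple illustration of that before-and-after comparison, here’s a sketch that subtracts each worker’s pre-test score from their post-test score. The `score_gain` function is our own naming, the scores are invented, and we’re assuming both tests are scored on the same 0-100 scale.

```python
def score_gain(pre_scores, post_scores):
    """Return each worker's gain (post minus pre) and the average gain.

    Both arguments map worker names to scores on the same 0-100 scale.
    Workers missing from either test are left out of the comparison.
    """
    gains = {
        worker: post_scores[worker] - pre_scores[worker]
        for worker in pre_scores
        if worker in post_scores
    }
    average = sum(gains.values()) / len(gains) if gains else 0.0
    return gains, average


if __name__ == "__main__":
    # Hypothetical scores, for illustration only.
    pre = {"Ana": 50, "Ben": 70, "Cy": 60}
    post = {"Ana": 80, "Ben": 90}
    gains, average = score_gain(pre, post)
    print(gains, average)
```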

During Training

You might provide some form of test during training to provide practice, give an opportunity for feedback to the employee, and let the employee judge where he/she is in terms of satisfying the learning objective.

Immediately after Training

This is the classic use of a test–at the end of training, to see if the employee can satisfy the objective(s).

Some Period of Time After Training

It might be worthwhile creating some form of test for later, after the employee has been on the job for a while. That’s because people tend to forget things over time, often after only a short period. For the same reason, it’s often recommended to provide refresher training followed by a test. (See Note 4.)

For more on this, see our articles on spaced learning and refresher training below:

- Beat the Training Forgetting Curve

- Using Spaced Practice to Support Memory After Training

- Evidence-Based Training & Spaced Learning: An Interview with Dr. Will Thalheimer

Evaluating Your Tests Once They’re Implemented

Once your test is “out there,” you’ll begin acquiring plenty of data about it. You can use this data to evaluate your test and, if necessary, revise it.

The kind of information you can use in your evaluation includes:

- Verbal reactions from your employees

- Average score on the test

- Score range for the test

- Percent of workers who pass and fail

- Percent of workers who never finish the test (and, hopefully, why)

- Questions everyone gets right (maybe these are too easy?)

- Questions everyone or a large number of people get wrong (maybe these are too difficult, not matched to your objective, or are written in a confusing manner?)

- Verbal feedback from your evaluators using checklists and rating scales
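
Several of the numbers in that list (average score, score range, pass/fail percentages, and the share of workers who never finish) can be pulled from raw scores with a few lines of code. This is a sketch under assumptions: the `summarize` function is hypothetical, an unfinished attempt is recorded as `None`, and the pass rate is calculated only among workers who finished.

```python
from statistics import mean


def summarize(scores, passing_score):
    """Summarize test results.

    scores is a list with one entry per worker: a percentage (0-100),
    or None if the worker never finished the test.
    """
    finished = [s for s in scores if s is not None]
    if not finished:
        raise ValueError("No finished attempts to summarize")
    passed = sum(1 for s in finished if s >= passing_score)
    return {
        "average": mean(finished),
        "range": (min(finished), max(finished)),
        # Assumption: pass rate is measured among workers who finished.
        "pass_rate": 100 * passed / len(finished),
        "unfinished_rate": 100 * (len(scores) - len(finished)) / len(scores),
    }


if __name__ == "__main__":
    # Hypothetical results, for illustration only.
    print(summarize([90, 70, None, 80, 60], passing_score=80))
```

The numbers only tell you where to look. The “why” behind an unfinished test or a low score still comes from talking to your workers and evaluators.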

You’re never really done creating any training material, and that’s true of your tests as well. You can constantly evaluate and revise when necessary. You should build the evaluation step into your process so it becomes a routine, part-of-the-job kind of thing. If you find something that needs rework, no big deal–just go back and make it better.

Conclusion: Testing Workers After Training

There you have it, a few ideas about testing and training. Hope you found some of this helpful and are able to put it into practice at work. Let us know if you have thoughts or questions.

And don’t forget these other articles about workforce testing as well:

- Writing multiple-choice questions

- Writing true/false, matching, dragging, and other types of questions

- Using scenario-based learning and assessments

- Testing and fidelity

- Testing, reliability, and validity

- The “testing effect” and the forgetting curve (included in this article)

Recommended Works about Testing

I consulted the following works while researching this article, referenced them throughout the article (see the Notes), and highly recommend that you check them out.

- Develop Valid Assessments, Patti Shank. Infoline: Tips, Tools, and Intelligence for Trainers, ASTD/ATD Press, December 2009.

- Evaluation Basics, Donald V. McCain. ASTD/ATD Press, 2005.

- Assessment Results You Can Trust, John Kleeman and Eric Shepherd. QuestionMark White Paper.

- Brain Science: Testing, Testing-The Whys and Whens of Assessment, Art Kohn. Learning Solutions Magazine, April 20, 2015.

Also, even though I didn’t reference these “testing” blog articles by Connie Malamed while writing this article, I recommend them nonetheless. I’ve read one or more of them in the past and remember they’re helpful, plus I just tend to find her stuff excellent.

Actually, now that I have written this, I’m going to make it a point to review these articles and see if there isn’t some helpful information I can fold into this post (I’m sure there is).

Notes

1. I took the dart and dartboard visual analogy from the “Assessment Results You Can Trust” (Kleeman and Shepherd) white paper noted above in the Recommended Works section. It’s worth checking out their article for many reasons, but they have visuals of this dartboard idea and it really brings the concepts to life immediately. Well done, you two.

2. Many resources on tests and test items talk about validity and reliability, but the Develop Valid Assessments book by Shank referenced above also introduced the concepts of “stakes” and “feasibility.” I thought they were worth including and discussing, but renamed them consequences and practicality.

3. The Shank book mentioned above introduces the idea of test and performance assessments. I’ve reworked it a bit to be knowledge tests and task-based tests.

4. The “Brain Science: Testing, Testing-The Whys and Whens of Assessment” article by Kohn noted above does an especially nice job of explaining this point (which is kind of big in learning and development circles these days, it seems). The article mentioned is part of a series he’s working on, and it seems like the entire series will be relevant and of interest once he’s completed it, so keep your eyes on that.